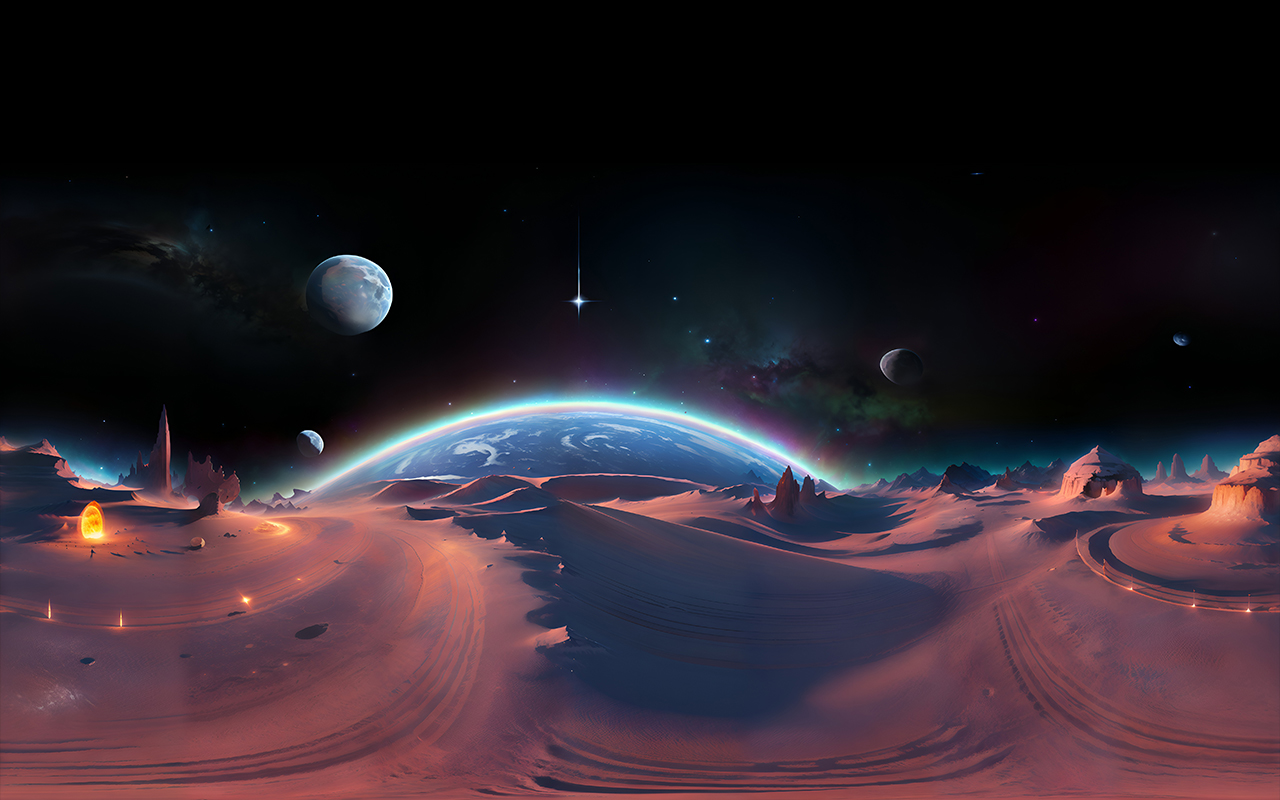

This 360 visual multimodal experience was created by synthesizing 2D footage and an AI-generated panorama to showcase NASA's CHAPEA (Crew Health and Performance Exploration Analog) mission.

CHAPEA is a series of analog missions that simulate a one-year stay on the surface of Mars. Each mission consists of four crew members living in a 3D-printed habitat called ‘Mars Dune Alpha’, designed solely to replicate Mars-realistic conditions. The aim of the mission is to gather the most accurate data on a variety of factors, including physical and behavioural health and performance. CHAPEA is planned to consist of three analog missions. The first mission began on 25 June 2023. Through the CHAPEA mission, NASA creates not only a physical but also a visual space by means of 3D printing.

In this piece we wanted to exhibit the CHAPEA project while experimenting with the interface format of 360 video. The video combines different elements: artificial intelligence, data sonification and conventional video footage. By combining a 360 panorama with other elements, such as an informative video about the CHAPEA project, we are looking for distinct forms of visual and multimodal narration.

The 2D informative video shown in this 360 piece displays the interior of the mission habitat, which simulates the conditions of Mars based on astronomical data. The 360 panorama, which we label an “AI generated speculative panorama”, was created with the Blockade Labs and Adobe Firefly AI image generators. The objective was to experiment with and explore the capacities and limits of 360 video combined with AI-generated design. The text prompts used to create this extraterrestrial landscape were:

“Outer space” in realistic style (for generating the 360 landscape)

“Mars Rover Mission car”

“Space basecamp colony”

“Alien spaceship”

“Beautiful space with nebula”

Here, the creative possibilities for adding to and changing the surroundings are endless, and elements can be added across different layers mixing sound, still images or video. For instance, while the background sound might strike one as a generic sci-fi soundtrack, it is in fact NASA's data sonification piece 5,000 Exoplanets, which is incorporated into the experience. This enables the resulting work to handle different sensory layers that can be combined to generate multimodal experiences. Consequently, we maintain that the immersive experience, created to be viewed in VR, can be a medium of multimodal inquiry. In this particular piece, the multimodal inquiry is initiated by contrasting and combining the documentary footage as a reflection of the image and the speculative design as a reflection of the imagination.

In the future, we plan to work more on these AI-generated 360 panoramas. We think they could be a way to create visual representations of possible scenarios within the context of astronomical exploration, and within other research lines of the Visual Trust project (Scientific, Religious and Social images).

* This result belongs to task 3.1 (Images of the outerspace and the scientific aesthetics), for which the postdoctoral researcher Alkim Erol is responsible.

References:

Step inside NASA's 3D-printed Mars simulation habitat

5,000 Exoplanets: Listen to the Sounds of Discovery (NASA Data Sonification)

https://www.srisathyasaiglobalcouncil.org/sri-sathya-sai-baba
